Artificial Intelligence Tools

Advancing meaningful learning in the age of AI

This work is archived. A 2024 version of Bloom’s Taxonomy Revisited (Version 2.0) is now available.

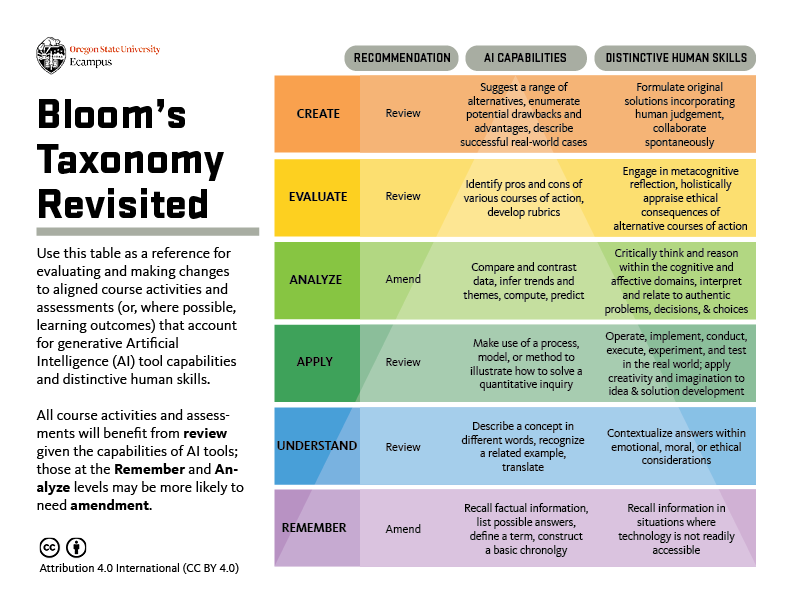

Bloom’s Taxonomy is often used as a resource to help higher education faculty assess what kinds or “levels” of learning are planned based on course-level outcomes and, relatedly, to align appropriate activities and assessments to support student learning and success. Here, we have used Bloom’s Taxonomy as a touchstone for reconsidering course outcomes and student learning in the age of generative AI. The visual outline and accompanying narrative below are intended for faculty use as a guide to reflect on their activities, assessments, and (possibly) course outcomes and begin to identify what changes may be needed to ensure meaningful learning going forward.

Considerations for each level of Bloom’s Taxonomy

Below, we break out each level of Bloom’s Taxonomy independently to unpack:

- how students might use AI tools for learning activities and assessments at this level, with attention to examples relevant to asynchronous, online courses;

- distinctive human skills that faculty can continue to emphasize and evaluate in learning at this level, which may guide thoughtful revision of course activities and assessments (and potentially learning outcomes);

- additional notes that may be helpful for faculty as they consider how to adapt to teaching and learning in this new age.

Considerations that we offer at each level can be useful regardless of whether faculty are inclined to encourage, discourage, or restrict student use of AI in learning processes in their course. For example, a faculty member who decides to discourage AI tool use might use this guide to identify which discrete assignment tasks students might be tempted to use AI support for; that faculty member might then alter their assignments to clarify why students need to practice those tasks without assistance. Conversely, a faculty member who decides to explicitly require students to consult an AI tool’s output and then strategically revise it might consider the distinctive human skills related to that task and adapt them into explicit grading criteria to ensure that students do revise the AI output.

It is also important to note that this guide was created at and for a particular moment in time (summer 2023), through reviewing literature on AI capabilities and our own experimentation. The examples we include below of how students might use AI tools are, by necessity, not comprehensive and are meant only to provide insight into ways that students are using the tools currently. Furthermore, as AI capabilities grow and evolve, the distinctive human skills to emphasize and the additional considerations that we provide may need to shift accordingly. We encourage faculty to be cautious about assumptions of what AI “can” and “cannot” do and to test them through research and experimentation. Many of our own assumptions were debunked as we put this tool together, such as the notion that only activities, assessments, and outcomes at the bottom levels of Bloom’s Taxonomy may need revision.

Finally, the levels of Bloom’s Taxonomy are offered intentionally in reverse order below to align with the related complexity of interactions with AI tools — beginning with simple prompting and moving toward more engaged interaction.

Remember

At this least-complex level of learning activities and assessments, students might use AI tools to look up basic facts about historical events or about character and plot line information in a literary text; to list possible answers or correctly select a category that a concept belongs to; to identify the correct definition of a term or chronological application of a concept; to outline related pieces of information, such as in a taxonomy; or to select the best response from a list.

AI tools are already very strong at producing accurate responses to prompts for basic information that is widely available on the web, much better than earlier Google searches and with less effort required from the user. For this reason, in the visual outline above, this level of Bloom’s Taxonomy is marked with a recommendation of amend, as learning activities or assessments at this level are more likely to warrant revision.

Faculty may want to consider if students will be expected to have these skills as foundational knowledge, such as doing simple calculations on the spot. Additionally, faculty might consider if there are any situations in which students would be without internet access and would need to recall detailed information for school, work, or personal purposes. For instance, a student doing field work counting fish in a remote area may not be able to access AI or other tools to help identify species. Students may not feel motivated to memorize information if such situations don’t or won’t exist for them.

Understand

Working on activities and assessments at this level, students might use AI tools to help them describe a concept in different words; to recognize an example of a specific idea; to explain a general theory in a specific context; to translate text from one language to another; or to relate a principle or concept to their own experience. Notably, when prompted with contextual information (such as providing personal details), AI tools can construct believable stories about human experiences, which is an important consideration for learning activities and assessments at this level and other levels of Bloom’s Taxonomy.

Faculty may choose to emphasize distinctive human skills by contextualizing learning within the affective domain and incorporating relevant emotional, ethical, and moral perspectives into the curriculum. For example, AI may not be as capable as a human at explaining the reasoning for respecting team members’ pronouns in a group setting, or at inferring subtext in a translated speech.

Faculty may also want to consider how students learn, grow, and develop from opportunities to make their own connections to content and applications at this level, and whether these opportunities are a significant loss if offloaded entirely or partially to AI tools; understanding how learning is built across practice tasks might be something to share more explicitly with students. Asking students to contextualize work at this level within learning materials and resources specific to the class and/or peer and instructor comments may also help students to stay focused on doing this cognitive work entirely (or mostly) from their own efforts.

Apply

Students are asked to demonstrate foundational skills related to “application” in their academic careers, such as writing, problem-solving, and decision-making, and these kinds of tasks are present across most courses in higher education. All of these tasks can be supported by the use of AI. AI tools can help students to apply problem-solving processes to industry-related challenges, and they can even create fictional scenarios where students can apply methods or models (e.g., SWOT) to make informed decisions. Students may use AI tools to support their writing process in the context of informal/low-stakes (e.g., journals, reflections, discussion boards) or formal/high-stakes (e.g., research papers, marketing plans, applications, etc.) products; for example, these tools can help a student to brainstorm, outline a paper, summarize sources, draft, and revise.

Despite the support that AI can provide, these tools cannot think critically within the context of the individual’s interests and experience or within the context of the course. For that reason, clearly articulating the purpose of assignments (the “why”) to drive student motivation and offering opportunities for students to make meaningful personal and real-world connections are best practices that faculty should continue to employ. A student stitching together their own ideas and supporting them in the context of existing expertise is critical to advancing learning and knowledge creation.

Regardless of a faculty member’s position on AI use in their course, a key consideration should be how AI use is framed to students, particularly on application-level tasks. A faculty member may choose to communicate how AI can augment rather than take the place of learning and growth. Balanced messaging, however, might also prompt faculty to highlight the potential for AI tools to undermine student skill development if not used judiciously. Considerations about disciplinary and relevant industry standards (e.g., what the field has described as standards for AI use or non-use) may also influence and justify faculty choices related to these kinds of common learning tasks.

Analyze

Students can now collaborate with AI tools on advanced processes such as data analysis. For example, a student can submit a data set, and the AI tool can merge, clean, calculate mathematical equations, compare and contrast information, identify trends, categorize outputs, and structure data. Students may guide, refine, and iterate on the AI analysis using sophisticated and targeted inputs, or analyze its methodology. Students may also use AI capabilities to support their learning and comprehension of the data, including the creation of data visualizations (e.g., charts, diagrams, mind maps, graphs, etc.). Human biases that can unintentionally skew analytical work may be reduced when work is completed by AI systems, though AI may introduce bias as well. AI’s general proficiency in particular aspects of analytical tasks prompts us to indicate that activities and assessments at this level are more likely to require amendment.

Yet no analytical work is entirely computational, and there is still much room for faculty to emphasize reflective pieces of the analytical process on which AI will have only a narrow perspective. For example, students could be asked, “What is missing?” “What needs to be added or adjusted?” “Are there any gaps?” “How is the AI making these determinations?” or “Do I agree with the analysis? Why or why not?”

Faculty revisiting course activities and assessments at this level may want to think holistically about analytical skill development, remembering connections to program-learning outcomes or additional objectives that aim to prepare students to think and reason as practitioners and researchers do within their chosen domain or industry. Situating analytical tasks within authentic and experiential learning contexts may also be helpful in promoting student engagement in these tasks.

Evaluate

At this level of learning, students may be asked to critique, appraise, or judge. These are areas in which generative AI is useful, for example, to provide context and identify both strengths and weaknesses of creative works, working hypotheses, or alternative courses of action. Across the curriculum, students may use AI to assist in developing criteria, standards, or rubrics to provide a basis for evaluation, or to provide an evaluation of their work against a faculty-provided rubric.

Distinctive human capacities for self-awareness, metacognitive reflection, and empathy are particularly applicable to learning at this level. Generative AI does not replicate the qualities of human consciousness, such as self-interrogation and self-assessment, that are brought to bear in many evaluative processes.

Faculty may focus on communicating the ways in which AI has strengths in evaluative processes but also falls significantly short of human capacities and qualities in this area. As with assignments at other cognitive levels, balancing and possibly blending the use of generative AI in learning activities with human cognition and metacognition could afford an augmented learning experience that elevates and rewards higher-level thought processes that students engage in after or alongside their collaboration with an AI tool. Students could also benefit from learning to critique the output of generative AI itself.

Create

At this higher order of learning, students may be asked to produce original work such as designing a product, process, or plan. For instance, in creating elements of a management plan for public lands, an AI tool might be used to kick-start the creative process by suggesting a range of alternative scenarios to potentially sustain economic, recreational, environmental, and aesthetic values. A generative AI tool that incorporates a search engine (such as Microsoft Bing) could be prompted to provide both recent and historical information and sources, to enumerate potential drawbacks and advantages of each scenario, and to describe successful real-world cases that resemble each scenario. A number of AI tools also have emerging strengths in creative products, such as image blending and creation and creative writing.

Distinctive human skills come to the fore in constructing novel solutions to problems, whether real or hypothetical, and artistic works that reveal something unique about human perspectives and the human condition. Creativity is a deeply human trait. In the example above, individual students or collaborative groups would apply human judgment (involving application, analysis, and evaluation) to put together potential elements of management scenarios to create a workable, coherent plan. While generative AI is capable of creating meaningful text and images, students can excel at developing original ideas, exercising moral judgment, and collaborating in both spontaneous and structured processes.

Faculty might consider communicating the value of balancing human capacities with the assistance that AI can afford in the creative process. As students become adept at prompt engineering (specific initial prompts and extended follow-up prompting to refine the AI output), judicious use of AI has potential to accelerate and broaden human learning experiences and to augment creative processes.

Conclusion

Daily life and the workforce are increasingly being saturated with AI-enabled technologies, and it is important to understand how students may already be able to use these powerful tools. This changing landscape prompts all of us in higher education to revisit our intended outcomes as well as teaching and assessment practices for students, and we hope that this information can help inform decisions at the course (and program) level about potential changes. Other sections in this guide will support next steps for small-scale revisions that faculty might make on their own or larger-scale revisions that may necessitate a course redevelopment.

Further reading

Siemens, G., Marmolejo-Ramos, F., Gabriel, F., Medeiros, K., Marrone, R., Joksimovic, S., & de Laat, M. (2023). Human and artificial cognition. Computers and Education: Artificial Intelligence 3.
