Artificial Intelligence Tools

Guidance for online course development and the use of artificial intelligence tools

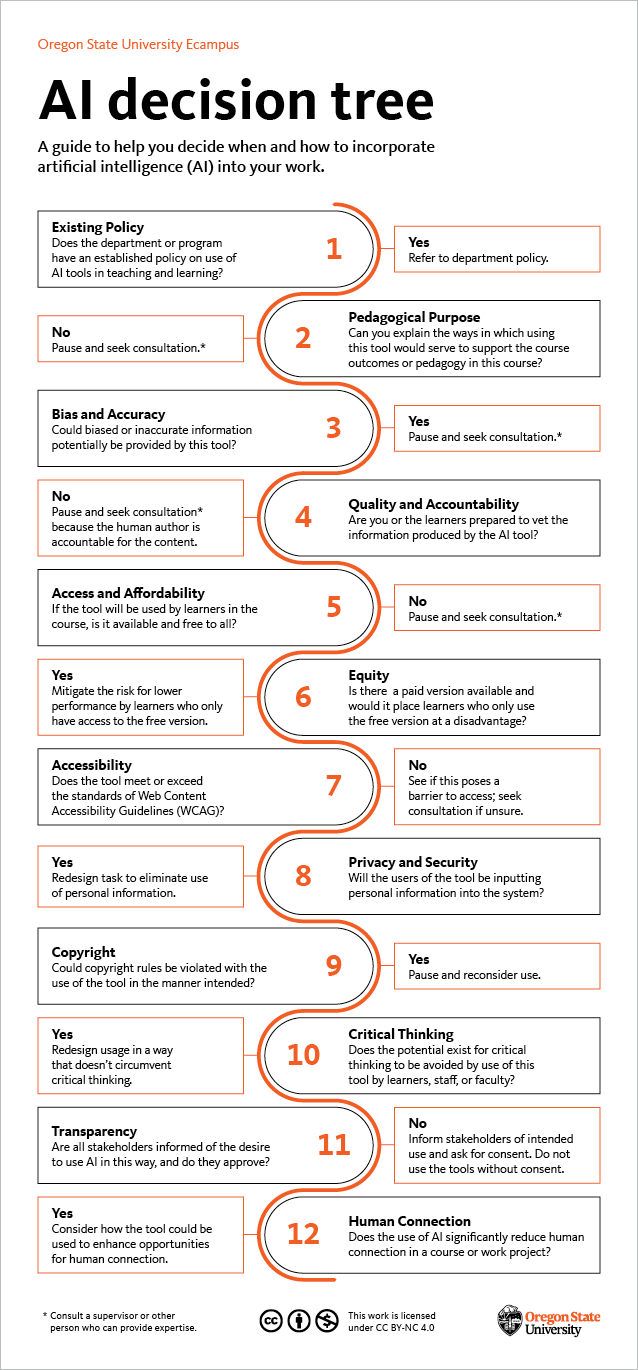

This resource is intended to help the Ecampus course development team as well as faculty and staff use a principles-based approach in deciding if and how to incorporate artificial intelligence tools into course development, research and other work projects.

Ethics statement

The introduction of generative artificial intelligence (AI) tools (such as ChatGPT, DALL-E and Bard) is changing the ways we work, communicate, and learn. It is both an exciting and challenging time, with thrilling possibilities that may also carry serious risks. We at Ecampus acknowledge that there remains a great deal of uncertainty about how to approach the use of these new tools for building online courses, creating new online pedagogies, and communicating with students in higher education.

And as these technologies rapidly improve, we recognize that we will need to adjust our approaches more quickly than we might find comfortable. However, if we center our approach to AI tools on Ecampus’ core values, we can engage with these technologies in ways that may maximize benefits for faculty and students while minimizing possible risks.

What follows is not a prescriptive tool, but rather a set of guidelines and best practices for the ethical and responsible adoption of AI tools into online courses. These guidelines are grounded in our core values here at Ecampus. In the spirit of creating high-quality learning experiences, we encourage the prioritization of the following principles based on the unique circumstances associated with a particular use case: 1) fostering student-centered approaches to learning; 2) demonstrating transparency; 3) promoting quality and practicing integrity; 4) safeguarding privacy and security; 5) avoiding potential bias and other social harms; and 6) ensuring equitable access.

The guidelines are intended to inform our work and the work of faculty. We hope they will help inform the necessary discussion, collaboration, and decision-making that enables the responsible and ethical use of AI tools in online courses.

Principles

- Be student-centered – Decisions to use an AI tool in course development work or in student-based online activities should be centered on whether it provides significant benefit to student learning and whether any risks in using it can be mitigated adequately.

- Demonstrate transparency – If AI tools are to be used to support course development work, stakeholders’ approval should be requested in advance. If AI tools are integrated into student activities or assignments, or used in other functions related to teaching, we recommend that faculty state clearly in the syllabus that such tools will be used.

- Promote quality and practice integrity – When AI tools are used, we will ensure that the information they provide is vetted by professionals qualified to judge whether it is accurate and credible. If AI tools are used in student assignments, we will work with course developers to ensure that accuracy is considered as part of the work.

- Safeguard privacy and security – When AI tools are used in any capacity in course development, we will take care to ensure that personal data is protected and that users are aware of any security risks associated with using the tool(s).

- Mitigate bias – When AI tools are used, we should assess, to the best of our ability, whether they risk producing biased information and determine how to address that risk.

- Ensure access – When AI tools are required in a course, they should be available and accessible to all users.

- Establish accountability – Regardless of how or whether AI is used, emphasize that the human author is accountable for all content produced.

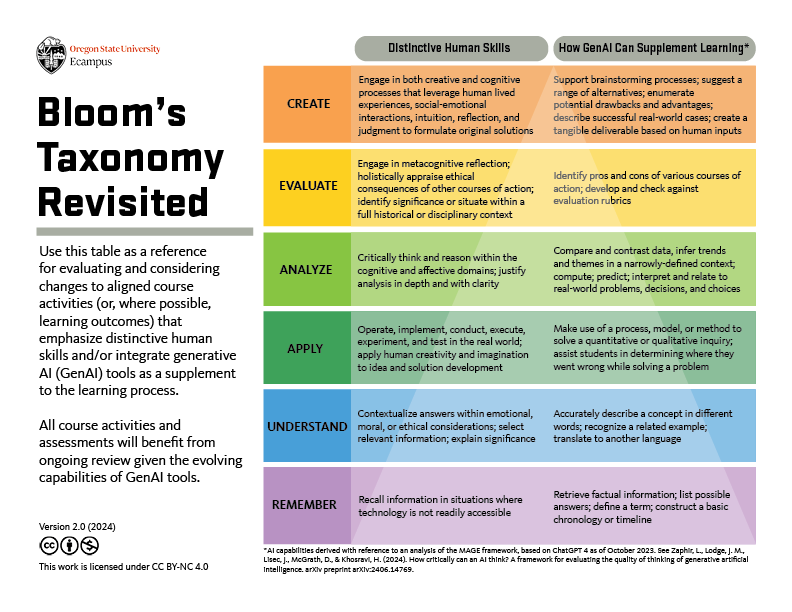
